Feedstack: Bridging the gap between unstructured feedback and visual design principles using Large Language Models

Accepted into ACM Conversational User Interfaces (CUI) 2025 in Waterloo, Canada.

Feedstack is a hypothetical system that uses novel LLM-driven interaction techniques to externalize the high-level principles underlying real-time feedback conversations.

Scope

Jun 2024 - Jan 2025

Funded by

National Science Foundation (NSF) REU Program, Temple University Human-Computer Interaction Lab

Roles

Research Lead, Design Lead

Team

Hannah Vy Nguyen, Yu-Chun Grace Yen, Omar Shakir, Hang Huynh, June A. Smith, Sheila Jimenez, Sebastian Gutierrez, Stephen MacNeil

Tools

Figma, Google, Overleaf/LaTeX

Abstract

Non-experts often struggle to interpret and apply unstructured feedback, whether it is provided by human experts or Large Language Models (LLMs). They also find it challenging to map feedback to high-level principles essential for effective learning.

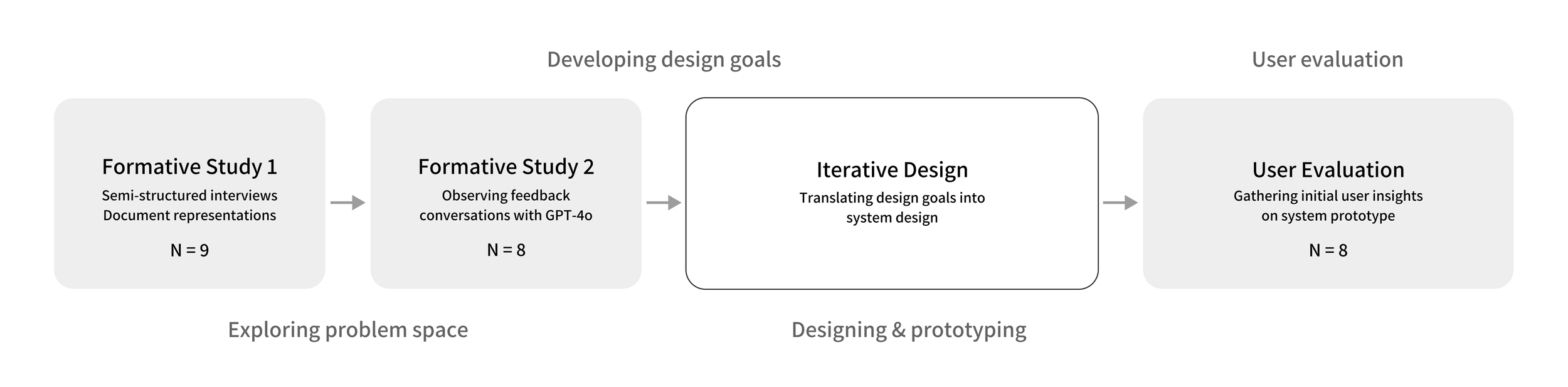

To address this challenge, we present Feedstack, an interactive system that creates bidirectional mappings between direct feedback and abstract visual design principles. Feedstack's design is informed by two formative studies (N = 17), including semi-structured interviews and observations that explored how students request and receive feedback using LLMs. We evaluated the Feedstack prototype through a user study (N = 8), where participants engaged with the system and reflected on its ability to structure feedback. Post-study surveys and interviews offered additional insights into how structured feedback, informed by high-level design principles, affected their experiences and feedback exchange. Our contributions include a conceptual framework and novel affordances for feedback structuring, aimed at helping non-experts better understand and apply high-level principles consistently across lengthy unstructured feedback conversations. This research contributes to advancing interactive feedback systems, particularly in educational contexts where real-time design feedback is critical for learning and skill development.

What was my role as Research Lead / first-author?

Project Manager

Lead a team of 7 undergraduate research assistants

Coordinate the Institutional Review Board (IRB) application process, gathering documentation and ensuring human-subjects research abides by protocol

Coordinate weekly meetings with the team, external collaborators, and principal investigator

Lead Researcher

Coordinate the development of study materials, designing surveys, interview questions, and user studies

Conduct 25 total user studies over the course of 2 months, meeting synchronously with participants via video conferencing

Manage the literature review, reading and organizing 80+ prior research articles

Guide research assistants in academic writing, data collection, and recruitment

Lead Designer

Develop design goals from research findings and iteratively create low-fidelity prototypes with the PI

Design and iterate upon novel user interface interactions

Translate designs into high-fidelity interactive prototypes on Figma

Guide research assistants in navigating Figma and FigJam files

Background & Challenge

Feedback plays a vital role in learning, particularly in design education where iterative refinement is key.

However, non-experts often struggle with unstructured feedback, finding it difficult to prioritize comments or connect them to underlying design principles.

Prior research shows that structured feedback, which ties comments to specific learning goals, improves comprehension and application compared to vague or open-ended feedback.

Several tools, such as Decipher and Voyant, have been developed to structure feedback and enhance learning. Meanwhile, generative AI (GenAI) has grown rapidly in recent years and has become a critical tool for help-seeking among students. Our proposed approach addresses the gap between unstructured feedback and design principles by linking feedback to broader design concepts, helping students better understand and apply the feedback they receive.

We aim to explore: How might we externalize design principles to guide non-expert designers when they receive unstructured feedback in real-time conversations?

Defining our research goals

Research Goal 1: Investigating students’ preferences for feedback structure in both human-human and AI-mediated feedback conversations

Research Goal 2: Exploring how non-experts engage with our system features designed to connect feedback instances to high-level principles

Designing a research plan

Formative Study 1: Exploring the problem space

Unstructured feedback is a challenge.

To probe the challenge of unstructured feedback further, I aimed to explore how student feedback receivers perceive unstructured feedback conversations. Using university mailing lists and Slack channels, I recruited 9 student participants who recently engaged in feedback conversations.

No prior experience with feedback systems was required, as we wanted to explore organic conversational feedback experiences.

Over 2 weeks, I conducted 1:1 semi-structured interviews with the 9 participants (5 male, 4 female), who were undergraduate and graduate students representing diverse fields of study such as Computer Science, Psychology, and Music. This cross-disciplinary representation was intentional: it captured a broad range of perspectives on conversational feedback.

In a collaborative qualitative coding process, I worked with another research assistant to identify recurring themes.

Formative Study 2: Design feedback with GPT-4o

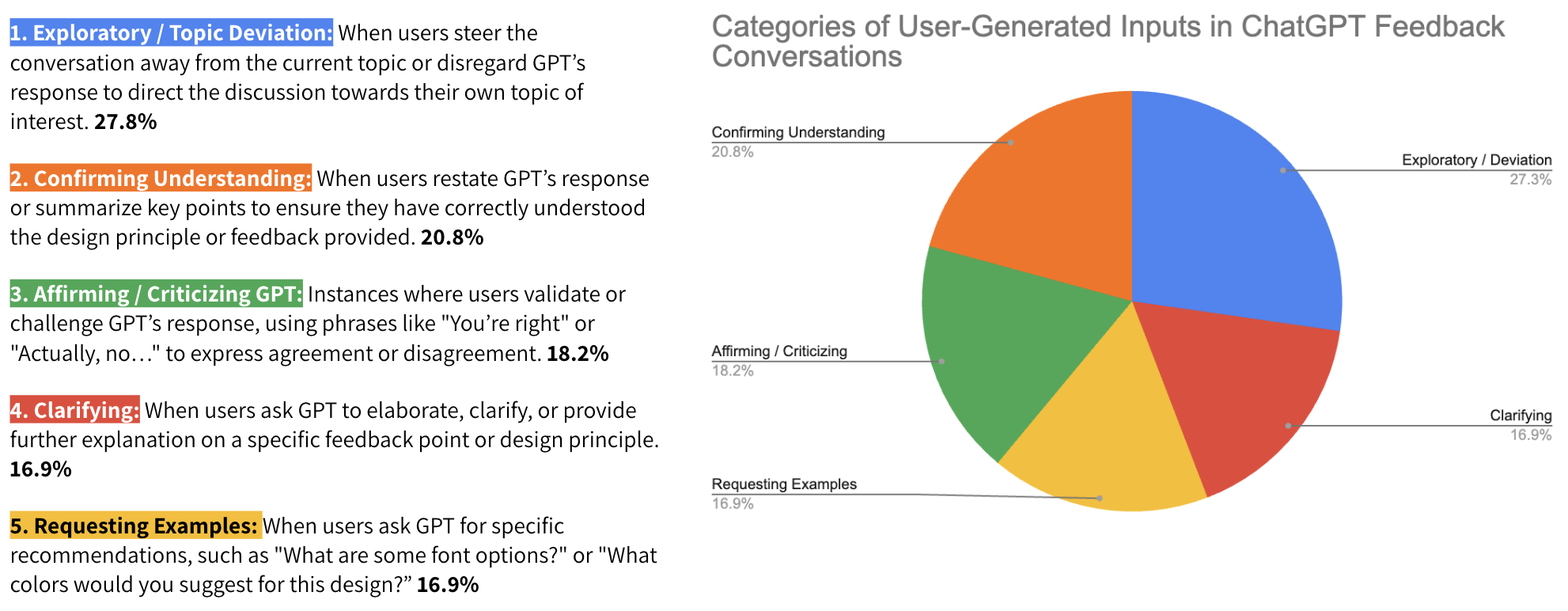

In our second formative study, I aimed to answer the following question: How do non-expert designers interact with the ChatGPT interface for design feedback? Help-seeking via GPT for educational purposes has been thoroughly researched, but a research gap remains around feedback on visual designs.

I ran the user study with 8 participants over 2 weeks. Here’s what I found:

Scroll-backs are inefficient. Participants noted the negative experience of having to scroll back on the GPT interface to reference the initial design at the beginning of the conversation.

Diverse conversational turns. Participants tended to pivot from the feedback conversation.

Translating Findings into Design Goals

Based on the findings from the Formative Studies (F1-F2), we defined four key design goals to inform our system design.

D1. Restructuring complex feedback into simplified content.

D2. Two-way mapping between feedback instances and design principles.

D3. Supporting feedback exploration and reflection.

D4. Enabling efficiency of feedback reception.
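
As a rough illustration of D2, the two-way mapping can be pictured as a pair of indexes kept in sync. This is a minimal sketch, not the Feedstack implementation: the class name, method names, message IDs, and principle labels ("contrast", "hierarchy") are all hypothetical.

```python
from collections import defaultdict


class FeedbackPrincipleMap:
    """Hypothetical two-way index between feedback instances and design principles."""

    def __init__(self):
        # feedback instance ID -> set of principle names
        self._principles_by_feedback = defaultdict(set)
        # principle name -> set of feedback instance IDs
        self._feedback_by_principle = defaultdict(set)

    def link(self, feedback_id, principle):
        """Record the link in both directions so either side can be looked up."""
        self._principles_by_feedback[feedback_id].add(principle)
        self._feedback_by_principle[principle].add(feedback_id)

    def principles_for(self, feedback_id):
        """Return the principles tied to one feedback instance, sorted by name."""
        return sorted(self._principles_by_feedback.get(feedback_id, set()))

    def feedback_for(self, principle):
        """Return the feedback instances tied to one principle, sorted by ID."""
        return sorted(self._feedback_by_principle.get(principle, set()))


# Illustrative data only: IDs and principle names are made up for this sketch.
mapping = FeedbackPrincipleMap()
mapping.link("msg-1", "contrast")
mapping.link("msg-1", "hierarchy")
mapping.link("msg-2", "contrast")

print(mapping.principles_for("msg-1"))
print(mapping.feedback_for("contrast"))
```

Keeping both indexes means a reader can start from a single comment and see which principles it touches, or start from a principle and collect every comment related to it across a long conversation.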

Iterative Design & Prototyping

User Study: Evaluating the Design

We completed a lightweight, exploratory user study to gather feedback. View the poster below (presented at CUI 2025 at the University of Waterloo, Waterloo, Canada) to learn about the results!